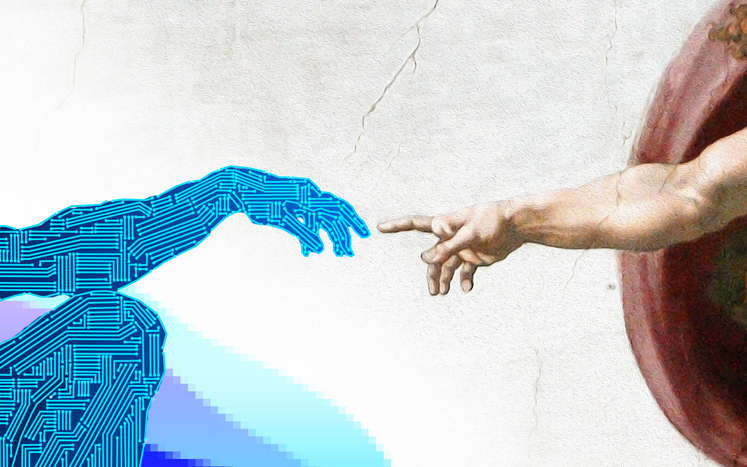

GenAI vs. DevOps: Decoding the Love-Hate Relationship

- By Winston Thomas

- May 01, 2023

To the layperson, coding looks like an assembly line: rows of programmers at rows of desks, a vision reinforced by the movies.

So it’s easy to see why many assume that generative AI, with its ability to write code, will make coding more efficient. Many believe it can shorten time-to-market and reduce the need for human coders, and that its ability to churn out placeholder code will make coding faster and more standardized.

Anyone who actually works in software development or DevOps knows the reality is not that simple. Sara Faatz, director of technology community relations at Progress, also feels we’ve been here before.

“Every time we have a transformational point in development, people are quick to say, it's going to replace developers and engineers. It's not, but it is going to change how we do things,” says Faatz.

Changing the developer code

DevOps was born out of frustration. Patrick Debois, an agile practitioner and project manager, felt that keeping software development and IT operations separate made little sense when both shared the same end goal. That thinking led to the first DevOps Days conference in 2009.

At the time, quality assurance (QA) teams took months to test everything, while developers raced to fix bugs before the release date. The mad scramble led to rolling restarts, hotfixes and a general belief that users should skip the first release and wait for the more stable second one.

Over time, the idea of DevOps took shape, helped along by the State of DevOps report from Alanna Brown of Puppet Labs and the adoption of some Agile development practices. Taking a page from manufacturing’s process optimization, it integrated product delivery, continuous testing, quality testing, feature development and maintenance releases to improve security and reliability.

The result was shorter time-to-market, better quality, higher productivity and the ability to build the right product through quick experimentation. Generative AI can make this “factory line” even more efficient: it can suggest code blocks, take over routine coding tasks and help with near real-time quality testing.

But this view overlooks the fact that coding is both science and art. It is easy to focus on the science, but the art is what separates efficient, agile and beautiful code from code that needs a lot of rework.

This is where the case for generative AI in DevOps gets less defined.

Many models are trained on the coding styles commonly found on the internet. Programmers, however, develop their own styles over time. If their feedback comes from a model trained on generic styles, it could impede their learning or keep them from developing a distinctive style of their own.

Still, it’s early days, and we have yet to see the real impact of generative AI on DevOps. Nor is Faatz dismissive of generative AI’s role in DevOps. “AI can assist in ways people hadn't thought of before.”

Faatz also argues that we’ve been looking at generative AI’s role the wrong way around. Instead of seeing it as an endpoint for DevOps, we should see it as “a starting point.”

Writing the starting block

Generative AI is getting better at using context and producing output from the right prompt. It can help create the starting code or framework.

“But after that, a developer still will need to look at that code and say this is correct or incorrect,” Faatz explains.

ChatGPT and other generative AI tools give software developers an opportunity to decode and improve established practices.

Generative AI can also help in other ways, such as training. “It changes how we start to train the younger generation of developers,” says Faatz, who suggests that an AI assistant can become a virtual mentor that teaches developers to code the right way.

Still, it will take a lot of convincing to get programmers on the generative AI bandwagon.

“Developers can be argumentative, and I know a number of them who've had arguments with AI already. And in all seriousness, I don't think the models are there yet. But the one thing that I think is true about the spirit of the developer, in general, is that he or she also truly loves being on the cutting edge,” says Faatz.

Faatz also thinks that we cannot leave the role of generative AI in DevOps to individuals. Instead, she calls for the entire development community to get involved.

“So, do I think generative AI is there right now? No. Do I think that we, as a community, can help it get there? Absolutely. But that's also where things like ethics and taking into consideration the human side of software becomes key,” says Faatz.

She drew comparisons to the accessibility-first mindset that currently pervades software development.

“For a long time, people didn't think about accessibility when writing their code. We as the development community need to make sure that people are thinking about [accessibility] first and foremost and create that in their code from the beginning.”

The black box conundrum

It is easy to see how generative AI can help us code better. But could using it expose development teams to lawsuits?

Take, for example, Matthew Butterick and a team of lawyers, who have filed a proposed class action lawsuit over Microsoft’s GitHub Copilot, a tool that suggests ready-made blocks of code. Because the tool learned by ingesting billions of lines of code from the internet, Butterick alleged this was “piracy.”

So what should software development teams that want to adopt generative AI do, especially if the tools they plan to use were trained on publicly available code without asking permission from individual contributors? And what if the model also learned from biased data sets, leading to unconscious bias in its output?

These are hard questions, but Faatz believes they are not unique to programmers. She feels that society needs to tackle them upfront.

“I don't have the right answer for that. We're in such an early stage that most developers are still experimenting,” she explains. The challenges arise, she adds, when “you start going into production and taking it beyond” experimentation.

New agile mindset

Discussions of generative AI in DevOps tend to focus on programmers. But the pressure on heads of development to become generative AI-savvy will be even more acute.

The reason? “The younger generation will be trained with (generative AI) as the norm,” says Faatz.

She advises team leaders to experiment with generative AI now and to set guidelines and guardrails. For her, generative AI’s entry into DevOps is just a matter of time, and team leaders must embrace the technology.

Another challenge is team dynamics. Seating a young programmer schooled in generative AI next to a more experienced colleague without that background can create friction. Yet they need to talk to each other to deliver efficient and reliable software on time.

Team leaders also lack the luxury of time to sort out this dynamic. End customers will demand faster time-to-market because they assume generative AI models will make software development leaner, less resource-intensive and faster.

So can we use generative AI (or models closer to general AI) to lead software development?

“I don't know that [programmers] want to be answerable to an AI. Part of it is that developers are creating and responsible for this [generative AI] technology itself. So I think the answer will depend on how involved [the programmers] are in that process, and this will probably dictate who ends up being answerable,” concludes Faatz.

Winston Thomas is the editor-in-chief of CDOTrends and DigitalWorkforceTrends. He’s a singularity believer and a blockchain enthusiast who believes we already live in a metaverse.

Image credit: iStockphoto/Marcio Binow Da Silva
