The Edtech Sector Needs an Urgent AI Re-education

- By Yatish Rajawat

- July 17, 2023

Generative AI has generated more words than any recent technology product. More people have discovered its garrulity than that of any other product in history. It has also led tech majors like Google to embed AI in new products.

Education is one of the sectors most affected by AI because, whichever way you look at it, it is a content-based industry. The content could be assessment tests, learning content, or content that the education sector expects its customers (students) to create. Every organization in the education space is dealing with a large quantity of content. And content is where generative AI plays: its capacity to create text, video, and images has no equal in tech history. So how does this sector look at generative AI as part of its strategy?

During a three-city roundtable by the Google Cloud team, many insights emerged about the impact of generative AI on the education sector, specifically why learning providers need to appoint specialist Chief AI Officers.

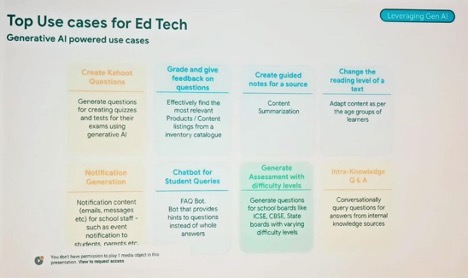

Google officials did a remarkable job presenting the use case of AI for various EdTech companies at the roundtable.

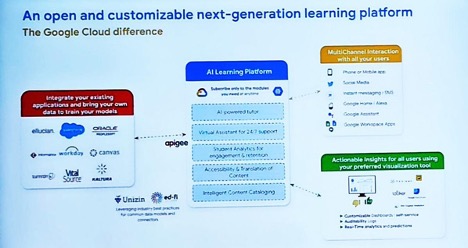

The end uses of AI in Edtech are many, and Google officials pointed out several such opportunities. Google's AI-based learning platform already has several trained AI engines, which any EdTech can adapt by training them on its own data.

The issue is not what AI or technology can do but what the organization is ready to do. And readiness is now a management issue inside an EdTech, one that varies with the company's AI maturity.

3 points of view

There are three kinds of players based on their views of AI’s impact on the education sector.

Let’s call the first segment AI cynics as they feel that AI is hype, and they have seen many such hype cycles before this. After all, demand for their degrees remains robust, and they still command a premium in the market.

The second group, the AI skeptics, consists of companies that have a few use cases for AI, are wondering how to apply them, and are not entirely convinced of the outcome.

The third category, the AIphiles, consists of digital natives who have adopted AI as a core part of their strategy.

Traditional education vs. AI-savvy students

AI cynics consist of education companies, universities, and even service providers to universities. They have a strong legacy reputation or brand and have built it over many decades. This segment has lived through several technological hype/trend cycles over the years and is cynical, with some justification, about buzzwords that promise to change their business.

Universities, colleges, and companies providing tech solutions to this segment still need deep knowledge of generative AI enterprise solutions. The impact is much more profound than anticipated, as generative AI is changing both the importance of many skills and the way this segment teaches them.

As student bodies start using generative AI tools in submitting every assignment and test, universities must develop systems to detect 'AI-based plagiarism' from ChatGPT and other generative AI tools. Even a university surrounded by a regulatory moat will be enormously disrupted by generative AI.

Like all cynics, AI cynics think they know everything but understand nothing, which is the challenge. They are the incumbents and believe the demand for their services is unlimited. Their leadership has mental frameworks that absorb information but rarely update the base mental model or regulatory thinking. Hence, they are good at detecting linear trends and patterns but cannot see exponential changes or prepare for them.

Moreover, they compare every new trend with one they have seen in the past and then ignore it. This is the same segment that felt the NEP did not affect them.

More importantly, the skills needed by this segment are changing. Skills like thinking, creative thinking, and collaboration have become more critical. These soft skills are hard skills in an AI age. AI cynics believe that AI is like cybersecurity or dot com or the other internet fads and will not change their fundamental approach to students or education. That is where they are so wrong, and that is why they need a chief AI officer to bring their top rung together on the impact of generative AI.

AI’s role needs serious thinking

The second group, the AI skeptics, believes that AI affects only specific use cases. They are worried about the impact of AI, but not all of them think it has to be a core part of their strategy. Some think of it as a side hustle and believe that AI's adoption should be decided case by case. They see no need to revisit their whole suite of offerings to the student through an AI lens. To this set of companies, AI will have only a peripheral effect. This is a technologist's, not a strategist's, way of looking at AI.

The AI skeptics’ limiting view applies AI to specific use-case verticals without understanding the horizontal or macro impact of AI on the business. This is where a chief AI officer is needed to bridge the gap between engineering and CXO leadership.

A considerable gap exists between the C-suite and engineering everywhere, even among cloud service vendors. Engineers are far ahead of sales teams in understanding the impact of AI on their ecosystems; sales teams and business units, however, are struggling to understand how artificial intelligence will affect their organizations, solutions, or strategies.

This becomes especially important as Google has developed a whole learning platform specifically to change how this segment launches its products or uses AI.

AI use case clarity lacking

The third and last bucket, digital-native Edtech, has an advantage over the others in that its promoters are tech-driven and understand the impact of AI. They know they cannot leave AI to create data models that can wipe out their businesses. These companies have promoters/owners who are talking directly to the engineers at the various cloud vendors and demanding to run their models.

Here, the concern is data integrity, privacy, and, of course, pricing. While vendors promise data integrity and, in some cases, even portability or transfers in typical situations, the issue must be explained to customers much better.

For example, they will insist on understanding what happens if they have trained an AI engine on their data to create a new AI model and decide to shift: can they shift the whole model or just the data? What happens to the learning or training done on their proprietary data?

Their biggest fear is that the cloud vendors will eventually emerge as their biggest competitors, since the vendors have AI engines that will be well trained on several diverse data sets. In short, these companies fear a hegemony of tech platforms providing Edtech solutions.

The case for CAIOs

Interestingly, deciding on an AI solution is not just a task for the CTO or the engineering head; the topmost echelon must do it. It would help if there were a chief AI officer in the organization to help the CEO, CFO, and CTO decide whether to adopt AI, but if not, the selling approach needs to change.

Unlike typical cloud offerings, which are sold more on cost and data integrity, AI solutions are more of a concept sell and need better training to convince the buyers. Moreover, the customers also need to understand the horizontal layer of the AI solution better, as its offering to the end customer will change in the near future.

The future is here, but not every education company is willing to accept it fully.

Yatish Rajawat is the founder of the Centre for Innovation in Public Policy, a think tank based in Delhi. His area of research includes everything digital affecting policy, people, and the biosphere. Feedback or contact at [email protected].

Image credit: iStockphoto/lakshmiprasad S; Screenshots: Yatish Rajawat