Operationalizing ML Models for DevOps and ML Engineers

- By Red Hat

- February 06, 2024

Building and maintaining ML models can feel like navigating a labyrinth. Every stage, from data prep and model development to deployment and monetization, presents its own hurdles. The good news? MLOps offers a map for working through these challenges efficiently.

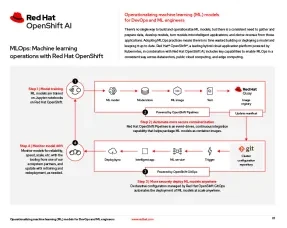

This whitepaper explores how Red Hat OpenShift AI, powered by the leading hybrid cloud platform Red Hat OpenShift, simplifies your MLOps journey. Discover how it empowers both DevOps and ML engineers to:

- Build MLOps into your workflow: Seamlessly integrate MLOps practices across development, operations, and data science teams, eliminating inefficiencies and wasted time.

- Accelerate with OpenShift: Leverage the power of Kubernetes and OpenShift's containerized approach for agile and consistent model development and deployment across diverse environments.

- Unlock value with OpenShift AI (formerly OpenShift Data Science): Access a comprehensive suite of data science tools and services, all integrated within the OpenShift platform, to streamline data preparation, model development, and deployment.