Derisking the AI Worker

- By Michele Goetz, Principal Analyst, Forrester

- January 11, 2018

Once you get over the fear of a robot taking your job (and if you have seen our robots today, you know they are still pretty dumb), your next big concern is how these new workers are going to perform. It’s not just a training question, it’s a management question. The risk comes from not understanding how AI workers make decisions and why they take the actions they do. Or we may come to understand, but only when it is too late.

For those who are working on sophisticated POCs, or contemplating how to bring their POC results into their business, transparency to derisk the robot is everything. Otherwise, we enter the next AI winter.

Philosophically, I think humans are the bigger unknown. You never really know how a person comes to a conclusion or why they took the actions they did. In many cases, the person can’t completely understand or communicate this either. We call that intuition, habit, a leap of faith. But we do look at an employee’s performance over time and at critical moments to see how they work through problems, behave, and execute on strategy and tasks. The outcome of their performance tells us a lot about the value an employee brings to the organization. This gives us confidence in our workforce and even our executive leaders.

Why not take the same approach to the AI worker?

We put so much effort into trying to head off adverse events that it can slow our AI projects down or keep them from going online. And clearly, regardless of the number of experts brought in to train the AI worker, humans can’t imagine every scenario this worker will encounter.

- What if we take an approach that allows the AI worker to learn – always and not just up front?

- What if we had manager cockpits to oversee AI worker behavior and performance in real time, with alerts on abnormal or deviant behavior?

- What if machine learning was an integral component of applying the right management, mentorship, and governance over our AI workforce to alert, train, quarantine, and promote?

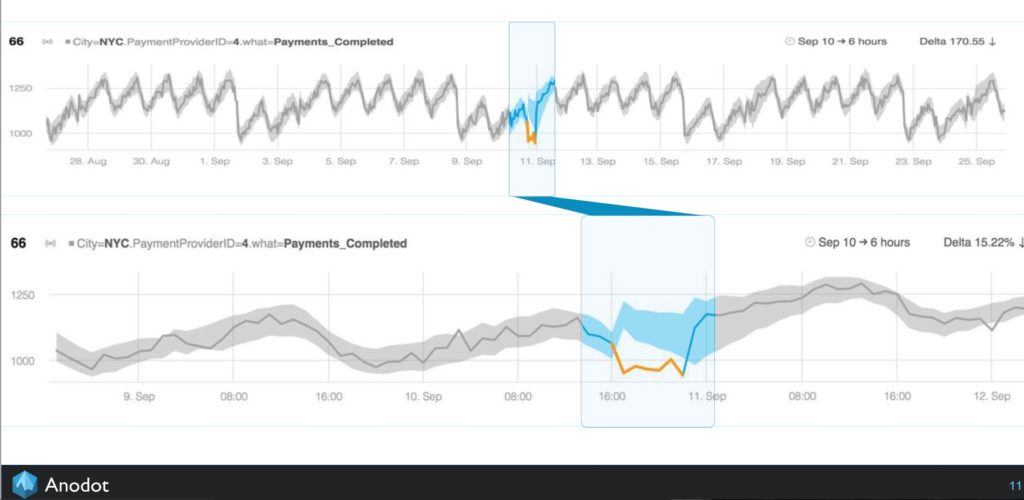

I had a briefing with a company called Anodot. They use machine learning to inspect data, events, and behavior and detect anomalies without having to set thresholds. Anodot’s analytics understand and interpret what normal looks like and alert in real time when those lines are crossed. They analyze e-commerce, utility, adtech, and payment processing activity. As I looked at some visualizations, a light bulb went on: this insight is not only powerful for our everyday transactional analysis, it is exactly the type of insight and oversight needed for the AI worker.

And that’s not too far off the mark. I have been talking with defense companies, product development consultancies, POC teams with consortiums, and EY, and they are all working on how to use AI to be the manager’s eyes and ears over their new AI workforce. Some think this is where the real business of AI will emerge: derisking AI.
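To make the idea of learning "what normal looks like" a little more concrete, here is a minimal sketch of a monitor that builds its own baseline from recent behavior instead of relying on a hand-set limit per metric. It is not Anodot's method, which the briefing did not detail; it swaps in a plain rolling mean and standard deviation. The class name `BaselineMonitor`, its parameters, and the sample readings (imagine hourly counts of some action an AI worker takes) are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class BaselineMonitor:
    """Hypothetical monitor: learns a rolling baseline for one metric."""

    def __init__(self, window=30, sensitivity=3.0, warmup=10):
        self.history = deque(maxlen=window)  # recent "normal" readings
        self.sensitivity = sensitivity       # band width in standard deviations
        self.warmup = warmup                 # readings needed before judging

    def observe(self, value):
        """Return True if value falls outside the learned normal band."""
        anomalous = False
        if len(self.history) >= self.warmup:
            mu = mean(self.history)
            sigma = stdev(self.history)
            # Guard against a zero-width band when history is perfectly flat.
            band = self.sensitivity * max(sigma, 1e-9)
            anomalous = abs(value - mu) > band
        # Learn only from readings judged normal, so one spike does not
        # widen the band. Trade-off: a lasting level shift keeps alerting
        # until a human (or another process) resets the baseline.
        if not anomalous:
            self.history.append(value)
        return anomalous


# Made-up readings for illustration; index 12 is a sudden spike.
readings = [20, 22, 19, 21, 20, 23, 18, 21, 20, 22, 21, 19, 60, 20]
monitor = BaselineMonitor()
alerts = [i for i, r in enumerate(readings) if monitor.observe(r)]
print(alerts)  # indices a manager cockpit would surface
```

The `sensitivity` setting is still a knob, but it is relative to whatever spread the monitor has observed, so the same setting can watch very different metrics. A production system would also need to handle seasonality and trend, which this sketch ignores.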

This article is from a Forrester blog. The original content is here.