Distributed, Scalable Data for Operationalizing AI: ASEAN Insights

- By Karen Kim, Human Managed

- March 18, 2024

The progress in AI, spearheaded mainly by tech giants, open-source communities, and new players, has made a new generation of AI-driven products and services accessible to any business that wants them. Many call it the newest 'inflection point' in technology since cloud computing. Piloting, developing, and launching AI apps for specific use cases has never been easier.

A study by Kearney predicts that AI has the potential to contribute USD1 trillion to the ASEAN economy by 2030.[1] Governments and businesses recognize the potential of AI to drive innovation, fuel growth, and improve customer service. In Singapore, AI is estimated to add 18% or USD110 billion to GDP, while the Philippines is projected to benefit from a 12% or USD92 billion lift.

But adoptable examples of operational AI with reusability, scalability and adaptability—especially in enterprise settings across multiple domains—are still limited.

So, what does operationalizing AI in ASEAN businesses look like? What enterprise problems can AI be applied to, especially those operational ones that keep businesses running and generating revenue?

This was the focus of the knowledge-sharing session organized by Human Managed, in which our customers and partners shared their perspectives within their unique contexts. Experts from SEA’s financial and technology services enterprises, including Aboitiz Group, BancNet, and Microsoft, provided insights on the three 'Ops' with the highest impact and value for businesses in the age of AI: DataOps (feeding AI), MLOps (tuning AI), and IntelOps (applying AI).

DataOps: Feeding AI

The first section, on DataOps, brought the focus back to ground reality amid AI's overwhelming hype and promises: what it is like to work with data every day to secure and scale a business.

I asked our speakers and guests to share, "What is the day-to-day data problem you have in operations?"

Their answers fell into five buckets:

- The visibility problem: Charmaine Valmonte, CISO at Aboitiz Group, said, “It's difficult to know what you have and track your business's changes consistently. Without a current and accurate view of your assets, their posture and behaviors, you are running blind.”

- The verification problem: Kerwin Lim, security operations head at Aboitiz Group, shared, “Data outputs generated by tools and solutions are not always trustable or right. A human's role is to analyze data and apply context and other perspectives critically. However, verifying data and removing false positives remains manual, repetitive, and time-consuming.”

- The foresight problem: The head of information and cybersecurity at a global bank observed, “Accurately predicting what is likely to happen from data analysis is desirable but very challenging to achieve because you need large volumes of reliable data, effective models, and quick feedback loops.”

- The prioritization problem: Jean Robert Ducusin, information security officer at BancNet, shared, “With data, events, and alerts being generated everywhere, it takes time to prioritize what is essential and decide what to do at speed.”

- The orchestration problem: Saleem Javed, founder of Human Managed, said, “Enterprises are struggling to bring together all technologies and tools into cohesive operational pipelines to detect, react, and respond, especially with the volume and velocity of data increasing.”

MLOps: Tuning AI

After establishing the ground reality from a data perspective, we zoomed out to see the world of opportunities and possibilities from an AI perspective.

In the MLOps section, Jed Cruz, a data and AI specialist from Microsoft, shed light on the fast-evolving landscape of AI, powered by tremendous advancements in foundation models, including large language models. Businesses are spoiled for choice when it comes to plug-and-play AI tools and features that solve specific problems or tasks, such as document and meeting summaries, code generation, or natural language chatbots.

However, operating AI at scale across multiple use cases and business processes requires custom-trained models. This is where businesses can unlock competitive and differentiated value from AI rather than use a ready-made solution that everyone else can access. It is also where most MLOps and LLMOps challenges occur, such as:

- Choice paralysis: with AI developments evolving so quickly, deciding what direction to take and what models to use is challenging.

- Lack of technical expertise: even after identifying the use cases and models for AI application, building the pipelines and productionizing requires deep technical knowledge, not to mention the capacity for experimentation (which will come with failures).

- Siloed data flow: typically, in an enterprise, data generation and management are siloed, which leads to isolated and limited decisions. To derive holistic, contextualized intel from data, the AI models must be trained on data whose lineage can be traced back to its raw form. This is hard to accomplish because the data first needs to be integrated across those silos.

- No context models: Generic or foundation AI models, no matter how advanced, will not magically produce accurate and precise outputs suited for your unique business context. For machine learning to be operational, AI models must be trained, tuned, and improved with data, logic, and patterns unique to your business.

- Feedback loop: AI models only improve with proper feedback, so decisions must be made about who will give the feedback and the associated mechanism. There is also a responsibility to check for and reduce bias and prejudice.

IntelOps: Applying AI

Even when you make headway with the DataOps and MLOps problems, one big piece of the puzzle is left in operationalized AI: presenting and serving the AI outputs to the correct recipient (humans or machines) at the right time. While the outputs of DataOps (insights & intel) and MLOps (labels) are important, what is also essential—but often deprioritized—is how they get generated, delivered, and tuned to be usable across the business.

At Human Managed, we call this continuous process of data-to-intel, intel-to-labels, and labels-to-serving pipelines IntelOps. To get the most out of AI in your day-to-day business processes, it's crucial to spell out how labor is divided between humans and machines:

- Which human or AI processes analyze which types of data and use cases? (Logic, model)

- Which human or AI processes generate outputs? (Insights, recommendations)

- Which human or AI processes do you use to execute actions? (Functions, tasks)

- Finally, how and where do you present the AI outputs? (API, report, notification)
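
One way to make these four questions concrete is to record the answers per use case as structured data, so the division of labor is explicit rather than held in people's heads. The sketch below is a minimal illustration, not Human Managed's implementation: the names `Actor`, `Channel`, `UseCasePlan`, and `human_touchpoints`, as well as the "alert triage" example, are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    HUMAN = "human"
    AI = "ai"


class Channel(Enum):
    API = "api"
    REPORT = "report"
    NOTIFICATION = "notification"


@dataclass(frozen=True)
class UseCasePlan:
    """Hypothetical record answering the four questions for one use case."""
    use_case: str
    analyzed_by: Actor    # who analyzes the data (logic, model)
    outputs_by: Actor     # who generates insights or recommendations
    executed_by: Actor    # who carries out the action (functions, tasks)
    served_via: Channel   # where the output is presented


def human_touchpoints(plan: UseCasePlan) -> list[str]:
    """Return the stages of a plan that still depend on a person."""
    stages = {
        "analyze": plan.analyzed_by,
        "generate": plan.outputs_by,
        "execute": plan.executed_by,
    }
    return [stage for stage, actor in stages.items() if actor is Actor.HUMAN]


# Illustrative example only; the use case and assignments are made up.
alert_triage = UseCasePlan(
    use_case="alert triage",
    analyzed_by=Actor.AI,
    outputs_by=Actor.AI,
    executed_by=Actor.HUMAN,
    served_via=Channel.NOTIFICATION,
)

print(human_touchpoints(alert_triage))
```

Keeping such a record for each use case makes it easy to see where people remain in the loop and to revisit those assignments as the required level of automation changes.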

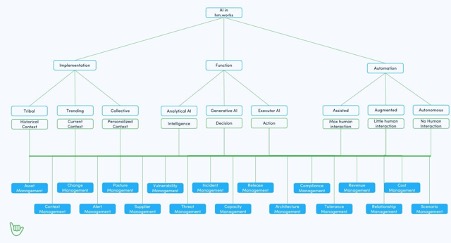

We shared how Human Managed (HM) will apply AI across all of our services. There is no single mode of AI; it differs with the type of implementation, the function, and the level of automation required.

Conclusion: The AI game is one of data, context, and processes

We cannot know what the future of AI holds, but one thing is certain: there will be more data, not less. Everything is a data point that could be analyzed if you want it to be. But to what end? Human capacity and process improvements are not keeping pace with technological developments and expected outcomes, so we need to prioritize which data problems to solve. Working with data is a given in today's operations. However, many enterprises still need to apply DataOps at scale to contextualize and train operational AI models across crucial business processes.

The real challenge is making data, models, and their outputs operationalized and always production-ready—not just once, but every day—with limited resources.

The good news is that these problems can be solved; many have been broken down and solved by different ecosystem players and domain experts, bringing new innovative products and services to the enterprise industry. This shifts the technology service and partnership models as we know them and makes data and AI platforms far more accessible to enterprise customers than ever before. Just as cloud computing distributed infrastructure and software, today's AI developments further break down and distribute AI functions (analytical, generative, model).

The AI game is one of data, context, and processes. We believe that the companies that continuously build the context of their business as distributed and scalable data (instead of tribal knowledge in individuals' minds) and work with a distributed ecosystem of partners and suppliers will be the ones that grow with AI instead of constantly playing catch-up.

The views and opinions expressed in this article are those of the author and do not necessarily reflect those of CDOTrends. Image credit: iStockphoto/champpixs

Karen Kim is the chief executive officer of Human Managed.